As one of Denver’s Fast 50, we’ve had a front-row seat to a growing trend: the consultancies gaining the most ground are those leading with an engineering-first model, and those best positioned to help enterprises achieve trusted AI at scale.

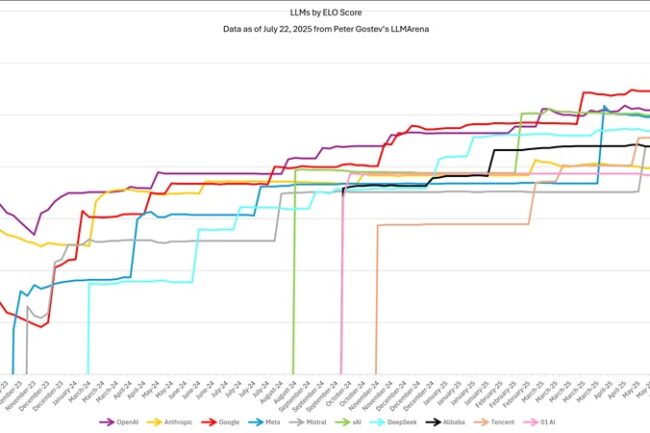

Since ChatGPT’s launch in late 2022, LLMs have evolved rapidly from research concepts to mainstream commercial tools with a wide range of use cases. In less than three years, explosive commoditization, marked by a surge of new entrants and little differentiation across platforms, has produced an intense battle for market share.

When “Good Enough” Isn’t Enough

At first glance, this seems like the endgame: a race to the bottom where only scale and compute efficiency matter. However, recent moves by OpenAI to stand up a consulting practice suggest the market is entering a second act that tracks closely with Clayton Christensen’s disruption theory. In Christensen’s framework, disruptive technologies begin as low-margin, “good enough” solutions that initially compete on cost rather than quality. Over time, as the technology matures and performance converges, companies move upmarket, delivering more specialized, higher-value offerings built around the commoditized technology.

OpenAI Competing on Expertise, Not Just Models

OpenAI’s move into consulting is a natural progression of this pattern. Reports indicate that OpenAI’s consulting engagements will start at around $10 million and involve deploying experts to customize GPT-4o for a client’s data, operations, and security needs, putting the company in more direct competition with firms like Palantir, Accenture, and major systems integrators. To make the jump, OpenAI will need to tailor its solutions for specific industries, positioning itself not just as a model provider but as a strategic partner. This shift signals that the real value of LLMs will no longer lie in the base models themselves, which are becoming interchangeable, but in the expertise and domain-specific applications layered on top of them.

Despite the rapid advancement of LLMs, integrating them into the workflows of large, regulated, risk-averse organizations remains a significant challenge. In these organizations, value creation depends on deeply customized applications governed by complex business rules and domain-specific expertise. Christensen’s Jobs to Be Done (JTBD) idea offers a useful lens: enterprises “hire” technology and consultants to arrive at tailored insights that align with their strategic goals, operational constraints, and compliance requirements. In their current state, LLMs lack the domain-specific depth and technical precision that job requires. Key pitfalls and risks include:

- Excessive system access: To operate effectively, AI agents require sweeping, often root-level access across calendars, files, communication platforms, and backend systems. While this collapse of traditional silos may enable more intelligent decision-making, it also concentrates risk, creating a single point of failure that exposes sensitive infrastructure to breaches, accidental misuse, or cascading systemic errors. In regulated industries, this level of access poses serious compliance and auditability challenges.

- Privacy & surveillance: Deploying LLMs or agentic AI within enterprise environments frequently requires decrypting sensitive data and transferring it to external, often cloud-based processing environments. This introduces surveillance risks, weakens protections tied to data residency or jurisdiction, and creates legal and regulatory exposure. Without policy-aware infrastructure, enterprises risk violating privacy commitments and falling short of data governance standards like GDPR or HIPAA.

- Breakdown of app-level security: AI agents often cut across previously siloed applications to extract and combine information, overriding traditional app-level permissions and safeguards. This can inadvertently expose confidential data, bypass embedded security controls, and make it harder to contain or trace incidents. Once those boundaries are blurred, organizations lose the layered security model that traditionally insulated sensitive operations.

- Loss of human agency: As AI systems automate increasingly complex tasks, human oversight can diminish. Decisions that used to involve multiple human touchpoints – especially in high-stakes, regulated settings – risk becoming fully delegated to autonomous systems. This not only introduces compliance risks but also creates confusion around accountability, as it’s often unclear who authorized what when a machine acts independently.

- Opaque, untraceable reasoning: Most LLMs operate as black boxes, generating outputs based on probabilistic associations rather than transparent logic. In high-stakes environments, this makes it difficult to explain how a decision was made, justify actions to regulators or clients, or even detect when something has gone wrong. Without mechanisms for traceability and explainability, trust in AI systems erodes quickly.

- Data integrity drift: By merging disparate datasets to generate outputs, AI systems can unintentionally corrupt meaning, misinterpret context, or surface outdated or contradictory information. This drift in data integrity not only undermines decision quality but can lead to flawed insights, reputational harm, or regulatory violations – especially when outputs are assumed to be authoritative without human validation.

These risks don’t just limit performance; they erode trust. Until LLMs can operate with precision, transparency, and control, they will remain on the periphery of mission-critical enterprise workflows. Without grounded context, policy-aware architecture, and stakeholder-ready interfaces, these models remain interchangeable and undifferentiated.
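To make a few of these controls concrete, here is a minimal Python sketch of a gate that sits between an AI agent and the systems it touches. It illustrates three ideas from the list above: least-privilege scopes instead of sweeping access, a human sign-off for sensitive actions, and an audit trail for every decision. The class name `PolicyGate`, the scope strings, and the agent and approver names are all hypothetical, chosen for illustration; this is not a description of any specific product’s implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PolicyGate:
    """Hypothetical guard between an AI agent and enterprise systems."""
    allowed_scopes: set   # least-privilege grants, e.g. {"calendar:read"}
    needs_approval: set   # scopes that also require a human sign-off
    audit_log: list = field(default_factory=list)

    def authorize(self, agent: str, scope: str,
                  approved_by: Optional[str] = None) -> bool:
        if scope not in self.allowed_scopes:
            decision = "denied: scope not granted"
        elif scope in self.needs_approval and approved_by is None:
            decision = "denied: human approval required"
        else:
            decision = "allowed"
        # Record every decision, allowed or not, so it can be traced later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "scope": scope,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision == "allowed"


gate = PolicyGate(
    allowed_scopes={"calendar:read", "payments:write"},
    needs_approval={"payments:write"},
)
print(gate.authorize("scheduler-bot", "calendar:read"))    # granted scope
print(gate.authorize("scheduler-bot", "files:delete"))     # never granted
print(gate.authorize("scheduler-bot", "payments:write"))   # no sign-off yet
print(gate.authorize("scheduler-bot", "payments:write", approved_by="j.doe"))
```

In this sketch, denied requests are logged alongside approved ones, so a reviewer can later reconstruct who authorized what and when.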

Bridging the gap from commoditized tooling to enterprise-grade solutions requires more than scale; it requires connective infrastructure that brings models in line with business context and addresses key risks. G2M’s Overwatch addresses this by embedding a dynamic knowledge graph directly within an LLM, enforcing SOC 2-compliant data handling, and offering interfaces designed for business stakeholders. This architecture enables organizations to operationalize AI with confidence, delivering secure, explainable, and context-rich outputs. The result: transparent reasoning chains grounded in proprietary knowledge, deployed in days rather than months, pushing LLMs firmly into the high-value, high-margin end of the disruption curve.

How Can We Help?

Feel free to check us out and start your free trial at https://app.g2m.ai or contact us below!